Training the Aurora Driver to detect and respond to dangerous road conditions caused by inclement weather

Texas is no stranger to tempestuous weather. Just a few months ago, I was hunkering down with some of our vehicle operators as a thunderstorm whipped through Dallas. The state’s occasional snow, sleet, or rain storms are typically short and intense, and can sometimes be accompanied by howling winds.

Like all trucking ecosystem businesses, we work with our customers to plan around such weather events and schedule our hauls for clear conditions. However, there’s always a chance of sudden, unexpected inclement weather, and our autonomous trucks have to be able to react appropriately when it happens.

As outlined in our Aurora Driver Beta 6.0 release, the Aurora Driver will slow and proceed with caution when it encounters weather such as rain, snow, or fog that affects visibility. This also applies to other situations in which the Aurora Driver’s perception is unexpectedly hampered by external factors such as dust, smoke, and even insects.

However, driving in extreme weather is never a good idea. When the road is obscured or slippery, accidents are more likely to happen—in fact, according to the U.S. Department of Transportation, approximately 21% of crashes occur in adverse weather. So when conditions become bad enough that the Aurora Driver can no longer safely and confidently drive through them even at reduced speed, it will begin searching for a safe place to stop and will alert the Command Center.

These maneuvers and the decision-making that triggers them rely on accurate and reliable detection and mitigation of harsh weather conditions.

Inclement weather often means reduced visibility

When we launch Aurora Horizon, our autonomous trucking service, our vehicles will be exposed to a wide variety of elements that may foul the sensors. If you’ve ever tried taking a photo in the rain or fog, you’ve probably noticed that the images come out lacking saturation, definition, and contrast—especially when water gets on the camera lens. The same holds true for the cameras and lidar that sit in the long-range sensor pods atop our trucks.

As moisture builds on the surface of the camera lens, the image begins to blur. Bright lights can become distorted and sometimes appear larger than they actually are.

Camera data shows a world that is slightly blurry and grey due to the rain. In the opposite lane, we can see the headlights of another truck reflected and superimposed over part of the grass divider.

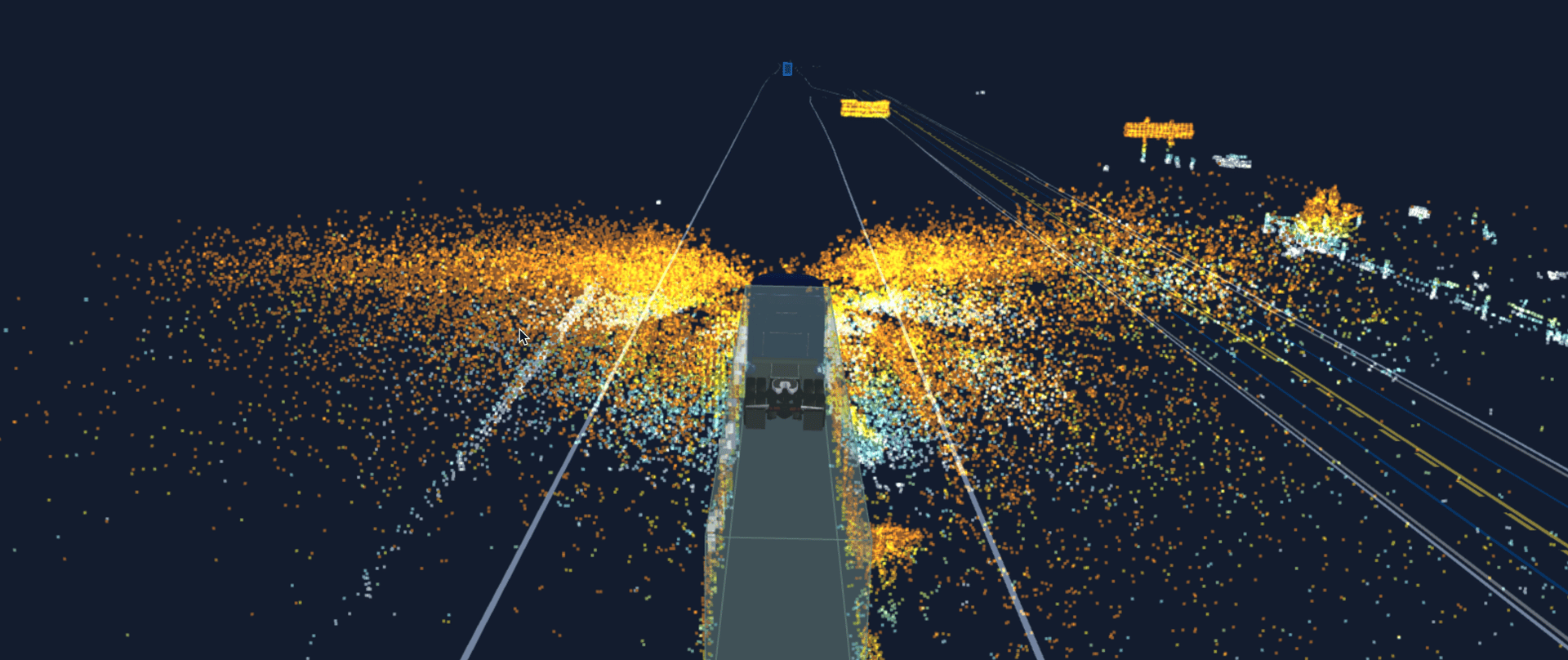

Light from our lidar sensors can bounce off of large particles in the air, such as raindrops or snowflakes, creating what can appear to be a cloud surrounding our truck.

Lidar sensors emit light that bounces off of surrounding objects. When it’s raining or snowing, light from our lidar sensors can bounce off of rain droplets or snowflakes in the air, creating what can appear to be a cloud hovering around our truck. When perception is impaired, like in this example from our training dataset, the Aurora Driver will alert the Command Center and find a safe place to pull over.

When dust, dirt, or other solid particles land on a sensor lens, they may block parts of a sensor’s field of view. In extreme cases, ice, snow, or other debris may completely obscure a sensor.

A camera lens covered in ice, snow, or other solid particles is unusable until cleaned. In this example, a camera lens is blocked by ice, making it almost impossible to see the road.

Our sensor cleaning solution

To keep our sensors operating at peak perception, we designed an innovative cleaning system that prevents moisture and debris from building up on our sensors’ lenses.

These two images, taken moments apart, show the before- and after-cleaning states of the Aurora Driver’s camera vision in light rain. The image on the top is slightly blurry and desaturated due to moisture accumulating on the camera lens. On the bottom is the same scene after the camera lens has been cleaned, displaying a sharper, more saturated, and higher-contrast image.

Each of our sensors is equipped with special nozzles that blast a combination of high-pressure air and washer fluid across its surface, completely cleaning each lens in milliseconds.

Close-up of our sensor cleaning system clearing water from the surface of a camera lens.

Putting safety first

The Aurora Driver’s perception system is constantly assessing the range and quality of the data its sensors record. Based on these assessments, the Aurora Driver contacts the Command Center and determines how to respond to the conditions. In clear weather, the decision is typically to continue at normal speed. If conditions are degraded too much for normal driving, the Aurora Driver will slow down to a reduced speed. In severe cases, the Aurora Driver will find a safe location to pull over and wait out the storm.
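To make the three tiers described above concrete, here is a minimal, purely illustrative sketch. It is not the Aurora Driver's implementation: the single `quality_score` in the range 0 to 1, the `choose_response` function, and both threshold values are hypothetical stand-ins for whatever range and quality assessments the real perception system produces.

```python
from enum import Enum


class Response(Enum):
    """The three responses described in the text."""
    CONTINUE = "continue at normal speed"
    SLOW = "proceed at a reduced speed"
    PULL_OVER = "find a safe location to pull over"


def choose_response(quality_score: float,
                    slow_below: float = 0.7,
                    stop_below: float = 0.3) -> Response:
    """Map a hypothetical perception-quality score in [0, 1] to a response.

    The score and both thresholds are assumptions made for illustration;
    a real system would weigh many signals, not a single number.
    """
    if not 0.0 <= quality_score <= 1.0:
        raise ValueError("quality_score must be between 0 and 1")
    if quality_score < stop_below:
        # Severe degradation: stop somewhere safe and wait out the storm.
        return Response.PULL_OVER
    if quality_score < slow_below:
        # Degraded but still drivable: reduce speed.
        return Response.SLOW
    # Clear conditions: carry on as normal.
    return Response.CONTINUE


if __name__ == "__main__":
    for score in (0.9, 0.5, 0.1):
        print(score, "->", choose_response(score).value)
```

Ordering the checks from most to least severe means the most conservative response always wins when a score falls under both thresholds, which matches the safety-first behavior the text describes.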

We prioritize the safe operation of our technology and the safety of other road users. That’s why we’ve designed the Aurora Driver to emulate the best human truck drivers and act conservatively when environmental conditions pose safety risks. When necessitated by harsh environmental conditions, a brief pause in the mission to wait out a sudden storm is safer, and therefore more desirable, than risking the lives of other road users.

As we expand our operations beyond our Dallas-Houston launch lane, we will continue to train the Aurora Driver to handle the different types of inclement weather it may encounter.

Senior Staff TLM, Sensor Perception, Software Engineering