Defining Progress in 2020

January 24, 2020

By Chris Urmson

Earlier this month, I wrote about what we’re working to deliver at Aurora this year. These priorities (advancing our core technology, securing a path to profitability, integrating the Aurora Driver into trucks, and continuing to develop a safety case) will get us closer to reaching our ultimate goal: developing self-driving technology that can safely move people and goods without the need for a human driver. However, before we can achieve this goal, we need to answer an important question: “What is the right way to measure progress, both at Aurora and in our industry?”

Historically, the industry and media have turned to tallying on-road miles and calculating disengagement rates as measurements of progress. As we pointed out earlier in the year, these numbers mean little when a) there’s no clear definition of what constitutes a disengagement in the industry and b) we’re constantly developing new capabilities and testing in more difficult environments. If we drive 100 million miles in a flat, dry area where there are no other vehicles or people, and few intersections, is our “disengagement rate” really comparable to driving 100 miles in a busy and complex city like Pittsburgh?

With that in mind, I’d like to share how we’re approaching defining progress at Aurora and how we think the conversation around progress will evolve in the industry and media.

How we define progress at Aurora

At Aurora, we measure our progress by technical or engineering velocity, which we can think of as progress made on core technology, not joint ventures, pilots, financial valuations, or on-road miles driven. As we inch toward our ultimate goal of safely and efficiently transporting people and goods, we define meaningful progress as year-over-year improvement with the following key concepts in mind.

We don’t keep repeating the same mistakes.

Not repeating the same mistakes means that once we see an issue, whether it’s a disengagement or simply driving we don’t like, we dig in to understand it and then figure out how we’re going to fix it. We may see the same thing in the field again, but we won’t be surprised by it again, and having multiple instances may ultimately help us characterize the issue so we can fix it. When it comes to disengagements, we learn from two main types:

- Reactionary Disengagements: These are situations where a vehicle operator disengages the Aurora Driver because they believe there is a chance that an unsafe situation might occur, or because they don’t like how the vehicle is driving. For example, if the Aurora Driver planned to conduct a lane change at an inappropriate time, the vehicle operator would quickly disengage.

- Policy Disengagements: Vehicle operators proactively disengage any time the Aurora Driver faces an on-road situation that it hasn’t been taught to handle yet. For example, we used to disengage before unprotected left-hand turns, and now we don’t because the Aurora Driver can handle those situations safely and smoothly.

We know we’re making progress because we’re constantly retiring or refining Policy Disengagements. We’re also passing virtual tests inspired by Reactionary Disengagements. We’ve built pipelines that allow us to continuously label, evaluate, and make virtual tests from scenarios we see on the road. When new versions of the software can pass those tests, we know they’re performing better than their predecessors.
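To make the idea concrete, here is a minimal Python sketch of comparing two software versions against a suite of virtual tests derived from on-road scenarios. It is an illustration only, not Aurora's actual tooling: the `Scenario` schema, the scenario names, and the functions `run_suite`, `compare_versions`, and `is_improvement` are all hypothetical, and each "driver version" is reduced to a function that reports whether a test passes.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Scenario:
    """A labeled on-road event turned into a virtual test (hypothetical schema)."""
    name: str
    source: str  # "reactionary" or "policy" disengagement that inspired the test


# Assumption: a software version is modeled as a function that returns
# True when it passes the given scenario.
DriverVersion = Callable[[Scenario], bool]


def run_suite(driver: DriverVersion, scenarios: List[Scenario]) -> Dict[str, bool]:
    """Run every scenario against one version and record pass/fail by name."""
    return {s.name: driver(s) for s in scenarios}


def compare_versions(old: Dict[str, bool], new: Dict[str, bool]) -> Dict[str, List[str]]:
    """List tests the new version newly passes and tests it newly fails."""
    fixed = sorted(n for n in new if new[n] and not old.get(n, False))
    regressed = sorted(n for n in new if not new[n] and old.get(n, False))
    return {"fixed": fixed, "regressed": regressed}


def is_improvement(old: Dict[str, bool], new: Dict[str, bool]) -> bool:
    """Treat a version as better if it fixes something and breaks nothing."""
    diff = compare_versions(old, new)
    return bool(diff["fixed"]) and not diff["regressed"]


# Made-up example data: three scenarios and two stand-in software versions.
scenarios = [
    Scenario("late_lane_change", "reactionary"),
    Scenario("unprotected_left_turn", "policy"),
    Scenario("jaywalker_crossing", "reactionary"),
]
old_passes = {"late_lane_change"}
new_passes = {"late_lane_change", "unprotected_left_turn"}

old_results = run_suite(lambda s: s.name in old_passes, scenarios)
new_results = run_suite(lambda s: s.name in new_passes, scenarios)

print(compare_versions(old_results, new_results))
print(is_improvement(old_results, new_results))
```

In this toy example the newer version passes one additional test and loses none, so it counts as an improvement. A real pipeline would track far richer signals than pass/fail, but the same comparison of a new version against its predecessor on a fixed set of scenarios is the core of the idea.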

We’re able to perform new, harder maneuvers in more complex areas.

In 2019, we focused on capabilities including merging, nudging, and unprotected left-hand turns, and the Aurora Driver can now perform each seamlessly, even in dense urban environments. In 2020, we’ll continue to improve how we predict and account for what we call “non-compliant actors.” These are people who aren’t following the rules of the road, such as jaywalkers and drivers who might aggressively cut us off. We can’t assume everyone on the road is a calm and rational driver, so we need to double down on these cases.

How we make progress as an industry

On-road miles driven in autonomy and disengagement rates — which will soon be made public in the California Disengagement report — are rapidly losing stock in the conversation around how we should measure progress. So, having a distinctive definition of progress is paramount and timely.

To that end, we think we’ll see the industry play catch-up by doing two specific things in 2020 that we’ve been doing since day one:

- We’ll see major investments in Virtual Testing.

- We’ll see companies squeeze more “value” out of their on-road miles.

Virtual Testing

Instead of racking up empty miles on the road, we’ve prioritized investment in our robust Virtual Testing Suite, which allows us to run millions of valuable off-road tests a day. We base virtual tests around interactions (stopping for pedestrians, finding a slot to merge, nudging around parked cars), so every virtual mile is interesting and valuable. Because of this, we estimate that a single virtual mile can be as insightful as 1,000 miles collected on the open road.

Our competitors understand this value proposition too: several of our peers have recently acquired simulation companies. In 2020, we anticipate they will continue to play catch-up on virtual testing tools through acquisitions and the development of internal programs.

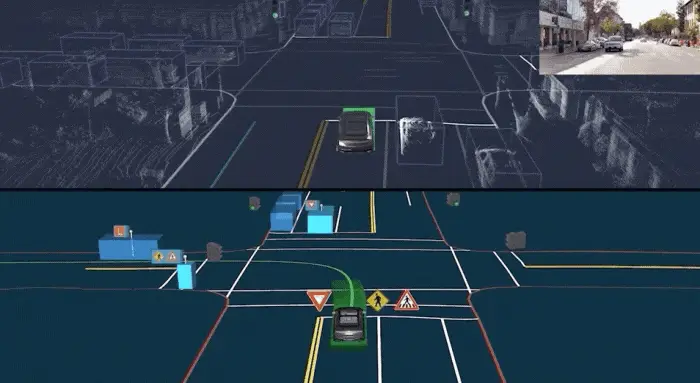

We used log data from real-world events like this unprotected left turn (top) to inspire new simulations (example on bottom).

Making Every Mile on the Road Count

One persistent misconception in the self-driving space is that the team with the most on-road development miles will “win.” We disagree. Our goal has always been to scale our data pipelines so that we collect useful data. Quality is more important than quantity in on-road testing, and we try to make every mile count.

We’ve built an infrastructure that optimizes the value of the data that we collect on-road. As we’ve said, we routinely use real-world scenarios to inspire new and more lifelike offline testing scenarios. We also feed examples of good and not-so-good driving decisions back into our motion planning models, allowing the Aurora Driver to continue to learn from on-road experiences.

In 2020, we expect to see a deeper emphasis on the quality of on-road miles collected and how self-driving companies leverage that data effectively to make progress.

We look forward to expanding our vehicle fleets for meaningful and novel data collection, testing, and validation in 2020. In the near future, I’ll be sharing more thoughts on how we measure progress now that we’ve defined it, along with other industry predictions around trucking, business models, and more.
