Perception at Aurora: No measurement left behind

November 14, 2021 | 4 min. read

How we’re blending state-of-the-art machine learning and engineering in our perception system to help the Aurora Driver handle the unexpected

Imagine you’re driving on the highway when something tumbles out of a flatbed truck in front of you. You can’t quite see what it is, but you can already tell it might end up in the middle of your lane. What would you do?

Situations like this are particularly challenging for self-driving vehicles, largely because perception systems can struggle to categorize objects they haven’t seen before. At Aurora, we addressed this issue by designing our system to track objects it doesn’t recognize. We call our approach “No measurement left behind,” and it’s one of the ways we ensure our vehicles drive safely.

Machine learning in perception

Our perception system is responsible for “seeing” and recognizing important objects that could affect the Aurora Driver. For nearly two decades, credible approaches to hard perception problems have been deeply rooted in machine learning. Our perception team has decades of combined experience in machine learning techniques for lidar, radar, and video, both in research and in fielded robotic systems.

Machine learning models excel at quickly making sense of complex data, so they’re ideal for identifying pedestrians, bicyclists, and other vehicles from massive amounts of sensor data in real time. These models learn how to identify objects of interest from labeled examples (e.g., cyclists at various angles), so we prioritize gathering and producing high-quality labels.

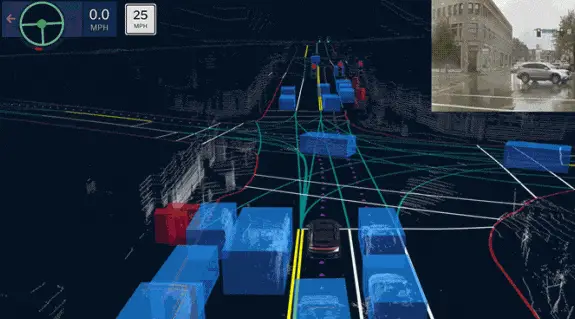

In this clip, our perception system was able to identify and accurately determine the location and extents of the parked vehicles and the oncoming vehicle. Our motion planning system used this information to determine when it was appropriate to nudge around the parked vehicles and continue on its route.

The performance of machine learning models is typically measured by analyzing:

- Recall: the percentage of real objects that the system correctly detects. For example, if there are nine pedestrians on the road and a system only sees eight of them, the recall of that system for pedestrians is 8/9, or about 89%.

- Precision: the percentage of the system’s detections that are labeled correctly. For example, in a scene with five parked cars, a system reports six parked vehicles, but one of them is really a dumpster. In this case, the precision for parked vehicles is 5/6, or about 83%.
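The two worked examples above reduce to simple ratios of counts. The short sketch below reproduces them; the function and variable names are illustrative assumptions, not part of any Aurora tooling.

```python
def recall(true_positives: int, false_negatives: int) -> float:
    """Fraction of real objects that the system detected."""
    return true_positives / (true_positives + false_negatives)


def precision(true_positives: int, false_positives: int) -> float:
    """Fraction of the system's detections that were correct."""
    return true_positives / (true_positives + false_positives)


# Nine pedestrians on the road, eight detected, one missed.
pedestrian_recall = recall(true_positives=8, false_negatives=1)

# Six "parked vehicle" detections, five real cars, one dumpster.
parked_vehicle_precision = precision(true_positives=5, false_positives=1)

print(f"pedestrian recall: {pedestrian_recall:.0%}")                # 89%
print(f"parked vehicle precision: {parked_vehicle_precision:.0%}")  # 83%
```

Note that the two metrics use different denominators: recall divides by what is really in the scene, while precision divides by what the system reported.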

Both are critical for self-driving. If there’s low recall, the system might not see something important, which could be a serious safety concern. With low precision, passengers might be subjected to unnecessary maneuvers that make the ride uncomfortable and also present safety concerns. For example, if the system mislabels a cloud of exhaust as an obstacle, the vehicle might suddenly stop.

The long-tail problem

We improve precision by continually fine-tuning the ability of our machine learning models to classify objects. But even if they could perfectly identify all of the more common objects (bicyclists, pedestrians, buses, etc.), it’s virtually impossible to train the system on every unique object that can occur on the road. That means even the most advanced perception systems may encounter things they simply don’t recognize. A trailer stacked with antique living room furniture, or boxes falling off the back of a truck: these are just some of the many possible situations that could confuse an otherwise well-trained model.

The occurrence of random, infrequent, and unique road events is called the long-tail phenomenon, and it’s one of the largest hurdles in perception. Systems that aren’t designed to handle the long-tail phenomenon could ignore or misclassify unusual data. This is not a risk we’re willing to accept.

No measurement left behind

To account for the long-tail phenomenon, we developed our perception system with the mantra: No measurement left behind.

Essentially, we designed our system to ensure that all of our sensor measurements have an explanation. We do this by detecting and tracking all of the object types we understand, including known obscurants (e.g., exhaust), sensor artifacts, and parts of the static world. Then, we don’t just ignore what’s left over. We explain remaining data by tagging it as one or more generic objects, and if those objects are moving, we track them. This involves combining state-of-the-art machine learning with advanced state estimation to predict each object’s trajectory without knowing what it is.
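To make the idea concrete, here is a minimal sketch of "explaining" every measurement. It is not Aurora's implementation: the `Measurement` type, the `label` field (standing in for the output of upstream detectors), and the greedy distance-based grouping are all assumptions chosen for illustration. The point is only that unlabeled measurements are grouped into generic objects rather than discarded.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Measurement:
    x: float
    y: float
    # Hypothetical: filled in by upstream detectors for known types
    # (vehicles, pedestrians, exhaust, static world, ...). None = unexplained.
    label: Optional[str] = None


def explain_all(
    measurements: List[Measurement], cluster_radius: float = 1.0
) -> Tuple[List[Measurement], List[List[Measurement]]]:
    """Split measurements into known ones and generic-object clusters.

    Every input measurement ends up in exactly one of the two outputs.
    """
    known = [m for m in measurements if m.label is not None]
    leftovers = [m for m in measurements if m.label is None]

    # Greedy single-pass grouping: join the first cluster that has a
    # member within cluster_radius, otherwise start a new cluster.
    generic: List[List[Measurement]] = []
    for m in leftovers:
        for cluster in generic:
            if any((m.x - o.x) ** 2 + (m.y - o.y) ** 2 <= cluster_radius ** 2
                   for o in cluster):
                cluster.append(m)
                break
        else:
            generic.append([m])
    return known, generic


measurements = [
    Measurement(0.0, 0.0, "vehicle"),
    Measurement(0.5, 0.0, "vehicle"),
    Measurement(10.0, 0.0, "exhaust"),
    Measurement(5.0, 2.0),   # unexplained, e.g. a falling box
    Measurement(5.3, 2.1),   # close to the previous point
    Measurement(20.0, -1.0), # a separate unexplained return
]
known, generic = explain_all(measurements)
print(len(known), "known measurements,", len(generic), "generic objects")
```

A production system would use far more capable detection and clustering, but the invariant is the same: the known set plus the generic clusters account for every measurement.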

This improves safety because when the Aurora Driver knows something is there, it can react (e.g., slowing down). Further, it’s designed to approach unknown objects cautiously, much like a human driver would. You can see this in the video below, which shows how the Driver reacts when a box suddenly falls out of the back of a pickup truck.

Notice that the environment in the video contains a series of cuboids. The colorful ones correspond to objects our perception system recognizes: other vehicles (blue) and pedestrians (red). The gray ones correspond to objects that are a) static scenery or b) what our system has deemed generic moving objects. When the box falls, it’s immediately tagged with tiny gray cuboids, meaning our perception system saw it, couldn’t place it in an existing category, and still tagged it as a generic object. Perception then reports the box’s current and estimated future position to the motion planner, which instructs the vehicle to stop.

The Aurora Driver reacts to a box falling out of the back of a truck. The perception system tags the box as a generic object and the Driver stops.
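Predicting where a generic object will be does not require knowing what it is. The sketch below assumes the simplest possible motion model, constant velocity estimated from the two most recent centroid observations. The post does not describe Aurora's actual state estimator, so `predict_position` and its track format are hypothetical.

```python
from typing import List, Tuple

# One observation of an object's centroid: (time in s, x in m, y in m).
Observation = Tuple[float, float, float]


def predict_position(track: List[Observation], horizon_s: float) -> Tuple[float, float]:
    """Extrapolate the latest position horizon_s seconds ahead.

    Assumes constant velocity between the last two observations;
    no object class is needed.
    """
    if len(track) < 2:
        raise ValueError("need at least two observations to estimate velocity")
    (t0, x0, y0), (t1, x1, y1) = track[-2], track[-1]
    dt = t1 - t0
    if dt <= 0:
        raise ValueError("observations must be in increasing time order")
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt
    return x1 + vx * horizon_s, y1 + vy * horizon_s


# A generic object seen 30 m ahead, then 25 m ahead half a second later.
box_track = [(0.0, 30.0, 0.0), (0.5, 25.0, 0.5)]
future_x, future_y = predict_position(box_track, horizon_s=1.0)
print(future_x, future_y)  # 15.0 1.5
```

A real tracker would typically filter noisy observations over many frames rather than difference two of them, but even this crude estimate gives a planner something to react to.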

Strategically applying machine learning

"No measurement left behind" allows us to leverage the strengths of machine learning in perception without needing to understand and recognize all object types. This maximizes our ability to drive safely in a complex world and reflects our broader development philosophy. We believe machine learning is a critical tool, but we also acknowledge that machine learning alone is not enough to create a scalable and robust Driver. That’s why we routinely combine learning with rigorous engineering in areas like perception, state estimation, reliable infrastructure, and decision-making. In future posts, we’ll discuss this in more depth and provide more examples of how we’re strategically combining learning and engineering in the Aurora Driver.

We’re hiring perception engineers to help us develop solutions like these. Visit our Careers page to learn more!
